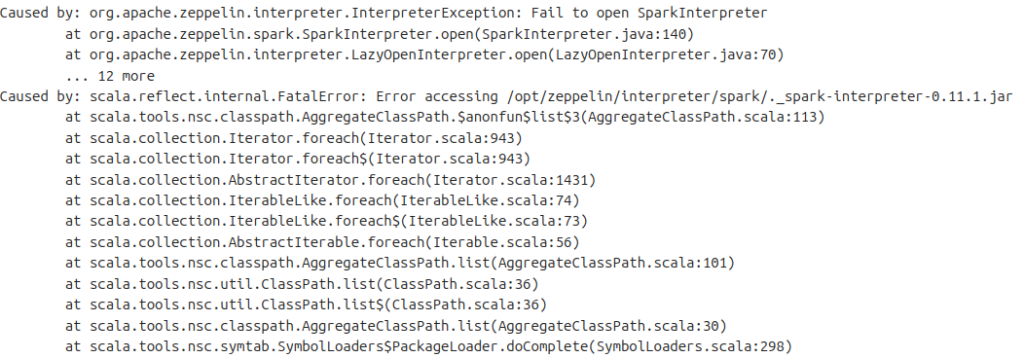

When installing Zeppelin 0.11.1 and Spark 3.3.3 with Docker, using my GitHub repository ( https://github.com/yodiaditya/docker-rapids-spark-zeppelin ), I received the following error:

Caused by: org.apache.zeppelin.interpreter.InterpreterException: Fail to open SparkInterpreter

at org.apache.zeppelin.spark.SparkInterpreter.open(SparkInterpreter.java:140)

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:70)

... 12 more

Caused by: scala.reflect.internal.FatalError: Error accessing /opt/zeppelin/interpreter/spark/._spark-interpreter-0.11.1.jar

at scala.tools.nsc.classpath.AggregateClassPath.$anonfun$list$3(AggregateClassPath.scala:113)

Apparently, the solution for this is very simple.

All you need to do is delete the files that cause the problem, that is, the ones whose names start with `._`. In my case:

rm /opt/zeppelin/interpreter/spark/._spark-interpreter-0.11.1.jar

rm /opt/zeppelin/interpreter/spark/scala-2.12/._spark-scala-2.12-0.11.1.jar
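The two `rm` commands above can be generalized into one small helper. This is only a minimal sketch under some assumptions: the interpreter directory is /opt/zeppelin/interpreter (taken from the error message), and the offending files all match the name pattern `._*.jar`. That pattern looks like the metadata files macOS creates when copying to other file systems, but the trace alone does not confirm where they came from. The function name `remove_dot_underscore_jars` is hypothetical and not part of Zeppelin.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Zeppelin): remove "._*.jar" entries
# under an interpreter directory, leaving the real JAR files in place.
remove_dot_underscore_jars() {
  # Default path is an assumption taken from the error message in this post.
  local dir="${1:-/opt/zeppelin/interpreter}"
  # Print each match, then delete it. Only names starting with "._" match,
  # so spark-interpreter-0.11.1.jar itself is never touched.
  find "$dir" -type f -name '._*.jar' -print -delete
}
```

Inside the container you would call it as `remove_dot_underscore_jars /opt/zeppelin/interpreter` and then restart the Spark interpreter. Matching on the `._` prefix matters: deleting the real spark-interpreter-0.11.1.jar by mistake would leave Zeppelin unable to load the interpreter class at all.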

One reply on “Fix Zeppelin Spark-interpreter-0.11.1.jar and Scala”

oh man i did it and now i get the

“Fail to create interpreter, cause: java.lang.ClassNotFoundException: org.apache.zeppelin.spark.SparkInterpreter”