Fixing the error that the Transformers Trainer() produces when running Vertex AI local-run with the GPU training Docker image asia-docker.pkg.dev/vertex-ai/training/pytorch-gpu.2-3.py310:latest

gcloud ai custom-jobs local-run --gpu --executor-image-uri=asia-docker.pkg.dev/vertex-ai/training/pytorch-gpu.2-3.py310:latest --local-package-path=YOUR_PYTHON_PACKAGE --script=YOUR_SCRIPT_PYTHON_FILE

The following error appears:

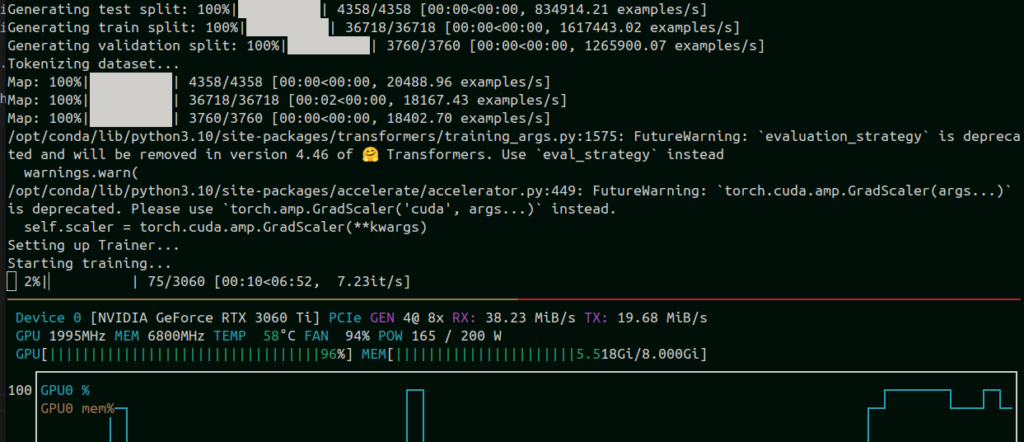

/opt/conda/lib/python3.10/site-packages/transformers/training_args.py:1575: FutureWarning: `evaluation_strategy` is deprecated and will be removed in version 4.46 of 🤗 Transformers. Use `eval_strategy` instead

warnings.warn(

Setting up Trainer...

Starting training...

0%| | 0/3060 [00:00<?, ?it/s]terminate called after throwing an instance of 'std::runtime_error'

what(): torch_xla/csrc/runtime/runtime.cc:31 : $PJRT_DEVICE is not set.

exit status 139

ERROR: (gcloud.ai.custom-jobs.local-run)

Docker failed with error code 139.

Command: docker run --rm --runtime nvidia -v -e --ipc host

The error what(): torch_xla/csrc/runtime/runtime.cc:31 : $PJRT_DEVICE is not set. apparently comes from the PyTorch side of the image, specifically the torch_xla runtime named in the message.
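A minimal sketch for checking this from inside the container, assuming the crash happens because torch_xla is present in the image while the PJRT_DEVICE environment variable is unset. That is an inference from the error message, not something confirmed here, and the helper name xla_env_problem is hypothetical.

```python
import importlib.util
import os


def xla_env_problem(environ=None, xla_installed=None):
    """Return a short description of the suspected problem, or None.

    Both arguments are optional so the check can be exercised without
    torch_xla being installed; by default the real environment is inspected.
    """
    if environ is None:
        environ = os.environ
    if xla_installed is None:
        # find_spec returns None when the package cannot be found.
        xla_installed = importlib.util.find_spec("torch_xla") is not None
    # Assumption: the crash is triggered when torch_xla exists but
    # PJRT_DEVICE is missing or empty.
    if xla_installed and not environ.get("PJRT_DEVICE"):
        return "torch_xla is installed but PJRT_DEVICE is not set"
    return None


if __name__ == "__main__":
    print(xla_env_problem() or "no torch_xla / PJRT_DEVICE mismatch detected")
```

Calling this at the top of the training script, before the Trainer is created, would at least confirm whether the image matches the situation described by the error.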

When I switch to tf-gpu.2-17.py310:latest, a different error shows up:

RuntimeError: Failed to import transformers.trainer because of the following error (look up to see its traceback):

Failed to import transformers.integrations.integration_utils because of the following error (look up to see its traceback):

Failed to import transformers.modeling_tf_utils because of the following error (look up to see its traceback):

Your currently installed version of Keras is Keras 3, but this is not yet supported in Transformers. Please install the backwards-compatible tf-keras package with `pip install tf-keras`.

exit status 1
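The condition this message describes (Keras 3 installed, tf-keras missing) can be detected before importing transformers. The following is a rough sketch only: it assumes the packages are published under the distribution names keras and tf-keras, and the helper names are hypothetical.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a distribution, or None."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def keras3_without_tf_keras(keras_version, tf_keras_version):
    """True when Keras 3 or newer is present and tf-keras is absent."""
    if keras_version is None:
        return False
    major = int(keras_version.split(".")[0])
    return major >= 3 and tf_keras_version is None


if __name__ == "__main__":
    # Distribution names "keras" and "tf-keras" are assumptions.
    if keras3_without_tf_keras(installed_version("keras"),
                               installed_version("tf-keras")):
        print("Keras 3 found without tf-keras; expect the import error above")
    else:
        print("no Keras 3 / tf-keras mismatch detected")
```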

The solution is simple: switch to an earlier TensorFlow GPU based Vertex AI training Docker image.

These images work with the latest transformers:

- asia-docker.pkg.dev/vertex-ai/training/tf-gpu.2-15.py310:latest

- and even tf-gpu.2-12.py310:latest works
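As a small illustration, the original command can be rebuilt with one of the working images swapped in. This sketch only assembles the argument list from the flags already shown in this post; the function name local_run_command is hypothetical, and the package path and script name remain placeholders.

```python
import shlex

# One of the images reported above as working with the latest transformers.
WORKING_IMAGE = "asia-docker.pkg.dev/vertex-ai/training/tf-gpu.2-15.py310:latest"


def local_run_command(package_path, script, image=WORKING_IMAGE):
    """Build the gcloud local-run argument list for the given image."""
    return [
        "gcloud", "ai", "custom-jobs", "local-run",
        "--gpu",
        f"--executor-image-uri={image}",
        f"--local-package-path={package_path}",
        f"--script={script}",
    ]


if __name__ == "__main__":
    cmd = local_run_command("YOUR_PYTHON_PACKAGE", "YOUR_SCRIPT_PYTHON_FILE")
    # Print a copy-pasteable shell command; pass cmd to subprocess.run to execute it.
    print(shlex.join(cmd))
```

Keeping the image URI in one constant makes it easy to try tf-gpu.2-12.py310:latest as well.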